Yes, Virginia, There *Is* a ~~Santa Claus~~ Difference Between Web Frameworks in 2023

Contents

- Introduction

- The Test

- PHP/Laravel

- Pure PHP

- Revisiting Laravel

- Django

- Flask

- Starlette

- Node.js/ExpressJS

- Rust/Actix

- Technical Debt

- Resources

Introduction

After one of my most recent job interviews, I was surprised to realize that the company that I had applied to was still using Laravel, a PHP framework that I tried about a decade ago. It was decent for the time, but if there's one constant in technology and fashion alike, it's continual change and the resurfacing of styles and concepts. If you're a JavaScript programmer, you're probably familiar with this old joke:

Programmer 1: "I don't like this new JavaScript framework!"

Programmer 2: "No need to worry. Just wait six months and there will be another one to replace it!"

Out of curiosity, I decided to see exactly what happens when we put old and new to the test. Of course, the web is filled with benchmarks and claims, of which the most popular is probably the TechEmpower Web Framework Benchmarks. We're not going to do anything nearly as complicated as them today though. We'll keep things nice and simple, both so that this article won't turn into War and Peace and so that you'll have a slight chance of still being awake by the time you're done reading. The usual caveats apply: this might not work the same on your machine, different software versions can affect performance, and Schrödinger's cat actually became a zombie cat who was half alive and half dead at the exact same time.

The Test

Testing Environment

For this test, I'll be using my laptop armed with a puny i5 running Manjaro Linux as shown here.

╰─➤ uname -a

Linux jimsredmi 5.10.174-1-MANJARO #1 SMP PREEMPT Tuesday Mar 21 11:15:28 UTC 2023 x86_64 GNU/Linux

╰─➤ cat /proc/cpuinfo

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 126

model name : Intel(R) Core(TM) i5-1035G1 CPU @ 1.00GHz

stepping : 5

microcode : 0xb6

cpu MHz : 990.210

cache size : 6144 KB

The Task at Hand

Our code will have three simple tasks for each request:

- Read the current user's session ID from a cookie

- Load additional information from a database

- Return that information to the user

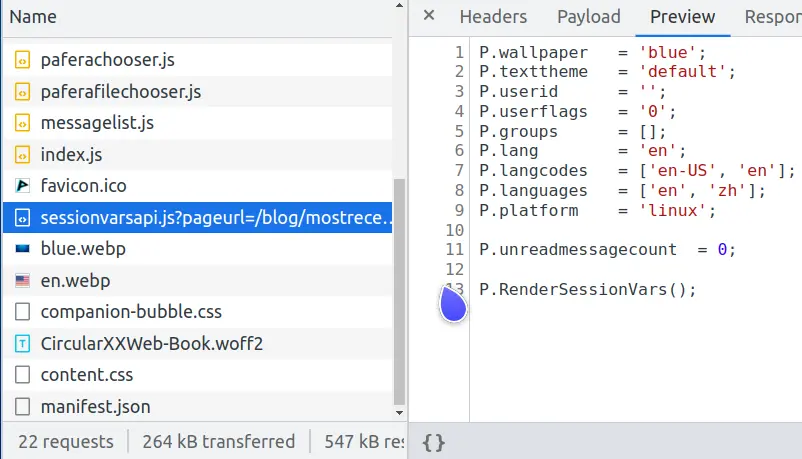

What kind of an idiotic test is that, you might ask? Well, if you look at the network requests for this page, you'll notice one called sessionvars.js that does the exact same thing.

You see, modern web pages are complicated creatures, and one of the most common tasks is caching complex pages to avoid excess load on the database server.

If we re-render a complex page every time a user requests it, then we can only serve about 600 users per second.

╰─➤ wrk -d 10s -t 4 -c 100 http://127.0.0.1/system/index.en.html

Running 10s test @ http://127.0.0.1/system/index.en.html

4 threads and 100 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 186.83ms 174.22ms 1.06s 81.16%

Req/Sec 166.11 58.84 414.00 71.89%

6213 requests in 10.02s, 49.35MB read

Requests/sec: 619.97

Transfer/sec: 4.92MB

But if we cache this page as a static HTML file and let Nginx quickly toss it out the window to the user, then we can serve 32,000 users per second, increasing performance by a factor of about 50.

╰─➤ wrk -d 10s -t 4 -c 100 http://127.0.0.1/system/index.en.html

Running 10s test @ http://127.0.0.1/system/index.en.html

4 threads and 100 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 3.03ms 511.95us 6.87ms 68.10%

Req/Sec 8.20k 1.15k 28.55k 97.26%

327353 requests in 10.10s, 2.36GB read

Requests/sec: 32410.83

Transfer/sec: 238.99MB

The static index.en.html is the part that goes to everyone, and only the parts that differ by user are sent in sessionvars.js. This not only reduces database load and creates a better experience for our users, but also decreases the quantum probabilities that our server will spontaneously vaporize in a warp core breach when the Klingons attack.
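To make the split concrete, here is a minimal Python sketch of the idea, not the code this site actually runs. The `render_page` function, the cache file handling, and the contents of the generated script are all assumptions for illustration; in the real setup Nginx serves the cached file directly without touching any application code.

```python
import tempfile
from pathlib import Path

render_calls = 0


def render_page():
    """Hypothetical stand-in for an expensive, database-heavy page render."""
    global render_calls
    render_calls += 1
    return "<html><body>Shared content for every visitor</body></html>"


def get_page(cache_file):
    """Return the page, rendering it only when no cached copy exists yet."""
    if not cache_file.exists():
        cache_file.write_text(render_page(), encoding="utf-8")
    return cache_file.read_text(encoding="utf-8")


def session_vars_js(count):
    """Build the small per-user script (its real contents are an assumption)."""
    return f"var sessionvars = {{count: {count}}};"


with tempfile.TemporaryDirectory() as tmp:
    cache_file = Path(tmp) / "index.en.html"
    # A thousand visitors ask for the same page.
    pages = [get_page(cache_file) for _ in range(1000)]

print(f"Served {len(pages)} pages with {render_calls} render(s)")
print(session_vars_js(3))
```

The expensive render happens once no matter how many visitors arrive, while the per-user part stays tiny enough to generate on every request.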

Code Requirements

The returned code for each framework will have one simple requirement: show the user how many times they've refreshed the page by saying "Count is x". To keep things simple, we'll stay away from Redis queues, Kubernetes components, or AWS Lambdas for now.

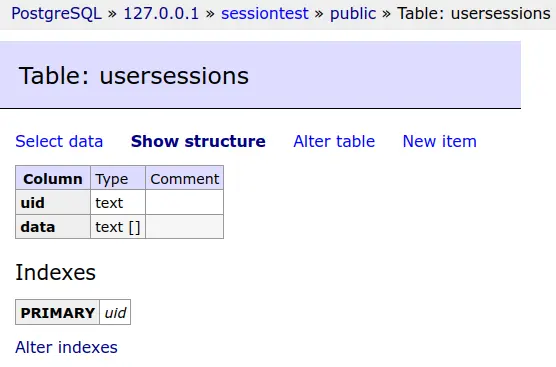

Each user's session data will be saved in a PostgreSQL database.

And this database table will be truncated before each test.
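Before we get to real frameworks, the whole task can be sketched without any framework at all. This is only an illustration under loud assumptions: it uses Python's built-in SQLite as a stand-in for PostgreSQL so that it runs anywhere, the `handle_request` and `open_db` names are made up, and the table layout is borrowed from the scripts later in this article.

```python
import json
import sqlite3
import uuid
from http.cookies import SimpleCookie


def open_db():
    """Create an in-memory SQLite database (a stand-in for PostgreSQL)."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE usersessions(uid TEXT PRIMARY KEY, data TEXT)")
    return db


def handle_request(db, cookie_header):
    """Run the three tasks for one request; return (body, cookie header)."""
    # 1. Read the session ID from the cookie, or mint one for a new visitor.
    cookie = SimpleCookie(cookie_header)
    if "sessionid" in cookie:
        sessionid = cookie["sessionid"].value
    else:
        sessionid = uuid.uuid4().hex

    # 2. Load this session's data from the database.
    row = db.execute(
        "SELECT data FROM usersessions WHERE uid = ?", (sessionid,)
    ).fetchone()
    count = json.loads(row[0])["count"] if row else 0
    count += 1

    # Save the new count so that the next request sees it.
    newdata = json.dumps({"count": count})
    if row:
        db.execute(
            "UPDATE usersessions SET data = ? WHERE uid = ?", (newdata, sessionid)
        )
    else:
        db.execute(
            "INSERT INTO usersessions(uid, data) VALUES(?, ?)", (sessionid, newdata)
        )
    db.commit()

    # 3. Return the information, plus the cookie the browser should send back.
    return f"Count is {count}", f"sessionid={sessionid}"


db = open_db()
body, cookie_header = handle_request(db, "")             # first visit, no cookie
print(body)
body, cookie_header = handle_request(db, cookie_header)  # same visitor again
print(body)
```

Every framework below does some version of these steps; the difference is how much machinery sits around them.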

Simple yet effective is the Pafera motto... outside of the darkest timeline anyways...

The Actual Test Results

PHP/Laravel

Okay, so now we can finally start getting our hands dirty. We'll skip the setup for Laravel since it's just a bunch of composer and artisan commands.

First, we'll set up our database settings in the .env file:

DB_CONNECTION=pgsql

DB_HOST=127.0.0.1

DB_PORT=5432

DB_DATABASE=sessiontest

DB_USERNAME=sessiontest

DB_PASSWORD=sessiontest

Then we'll set one single fallback route that sends every request to our controller.

Route::fallback(SessionController::class);

And set the controller to display the count. Laravel can store sessions in the database through its database session driver (selected with SESSION_DRIVER in the .env file). It also provides the session() function to interface with our session data, so all it took was a couple of lines of code to render our page.

class SessionController extends Controller

{

public function __invoke(Request $request)

{

$count = session('count', 0);

$count += 1;

session(['count' => $count]);

return 'Count is ' . $count;

}

}

After setting up php-fpm and Nginx, our page looks pretty good...

╰─➤ php -v

PHP 8.2.2 (cli) (built: Feb 1 2023 08:33:04) (NTS)

Copyright (c) The PHP Group

Zend Engine v4.2.2, Copyright (c) Zend Technologies

with Xdebug v3.2.0, Copyright (c) 2002-2022, by Derick Rethans

╰─➤ sudo systemctl restart php-fpm

╰─➤ sudo systemctl restart nginx

At least until we actually see the test results...

PHP/Laravel

╰─➤ wrk -d 10s -t 4 -c 100 http://127.0.0.1

Running 10s test @ http://127.0.0.1

4 threads and 100 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 1.08s 546.33ms 1.96s 65.71%

Req/Sec 12.37 7.28 40.00 56.64%

211 requests in 10.03s, 177.21KB read

Socket errors: connect 0, read 0, write 0, timeout 176

Requests/sec: 21.04

Transfer/sec: 17.67KB

No, that is not a typo. Our test machine has gone from 600 requests per second rendering a complex page... to 21 requests per second rendering "Count is 1".

So what went wrong? Is something wrong with our PHP installation? Is Nginx somehow slowing down when interfacing with php-fpm?

Pure PHP

Let's redo this page in pure PHP code.

<?php

// ====================================================================

function uuid4()

{

return sprintf(

'%04x%04x-%04x-%04x-%04x-%04x%04x%04x',

mt_rand(0, 0xffff), mt_rand(0, 0xffff),

mt_rand(0, 0xffff),

mt_rand(0, 0x0fff) | 0x4000,

mt_rand(0, 0x3fff) | 0x8000,

mt_rand(0, 0xffff), mt_rand(0, 0xffff), mt_rand(0, 0xffff)

);

}

// ====================================================================

function Query($db, $query, $params = [])

{

$s = $db->prepare($query);

$s->setFetchMode(PDO::FETCH_ASSOC);

$s->execute(array_values($params));

return $s;

}

// ********************************************************************

session_start();

$sessionid = 0;

if (isset($_SESSION['sessionid']))

{

$sessionid = $_SESSION['sessionid'];

}

if (!$sessionid)

{

$sessionid = uuid4();

$_SESSION['sessionid'] = $sessionid;

}

$db = new PDO('pgsql:host=127.0.0.1 dbname=sessiontest user=sessiontest password=sessiontest');

$data = 0;

try

{

$result = Query(

$db,

'SELECT data FROM usersessions WHERE uid = ?',

[$sessionid]

)->fetchAll();

if ($result)

{

$data = json_decode($result[0]['data'], 1);

}

} catch (Exception $e)

{

echo $e;

Query(

$db,

'CREATE TABLE usersessions(

uid TEXT PRIMARY KEY,

data TEXT

)'

);

}

if (!$data)

{

$data = ['count' => 0];

}

$data['count']++;

if ($data['count'] == 1)

{

Query(

$db,

'INSERT INTO usersessions(uid, data)

VALUES(?, ?)',

[$sessionid, json_encode($data)]

);

} else

{

Query(

$db,

'UPDATE usersessions

SET data = ?

WHERE uid = ?',

[json_encode($data), $sessionid]

);

}

echo 'Count is ' . $data['count'];

We have now used 98 lines of code to do what four lines of code (and a whole bunch of configuration work) in Laravel did. (Of course, if we did proper error handling and user facing messages, this would be about twice the number of lines.) Perhaps we can make it to 30 requests per second?
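As an aside, the INSERT/UPDATE branch at the end of the script could be folded into a single statement. The sketch below shows the idea in Python with the built-in SQLite module standing in for PostgreSQL, which is an assumption made purely so that it runs without a database server. The `ON CONFLICT ... DO UPDATE` clause needs SQLite 3.24 or later, and PostgreSQL has accepted the same clause since 9.5. The `bump_count` helper is a made-up name, and none of this was part of the benchmarked scripts.

```python
import json
import sqlite3

# Insert the row on the first visit, overwrite its data on later ones.
UPSERT_SQL = """
    INSERT INTO usersessions(uid, data)
    VALUES(?, ?)
    ON CONFLICT(uid) DO UPDATE SET data = excluded.data
"""


def bump_count(db, sessionid):
    """Increment and store the counter for one session; return the new value."""
    row = db.execute(
        "SELECT data FROM usersessions WHERE uid = ?", (sessionid,)
    ).fetchone()
    data = json.loads(row[0]) if row else {"count": 0}
    data["count"] += 1

    # One statement covers both the first visit and every later one.
    db.execute(UPSERT_SQL, (sessionid, json.dumps(data)))
    db.commit()
    return data["count"]


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE usersessions(uid TEXT PRIMARY KEY, data TEXT)")

counts = [bump_count(db, "demo-session") for _ in range(3)]
print(counts)
```

This trims a few lines, but it does not change the number of round trips to the database, so I would not expect it to move the benchmark numbers much.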

PHP/Pure PHP

╰─➤ wrk -d 10s -t 4 -c 100 http://127.0.0.1

Running 10s test @ http://127.0.0.1

4 threads and 100 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 140.79ms 27.88ms 332.31ms 90.75%

Req/Sec 178.63 58.34 252.00 61.01%

7074 requests in 10.04s, 3.62MB read

Requests/sec: 704.46

Transfer/sec: 369.43KB

Whoa! It looks like there's nothing wrong with our PHP installation after all. The pure PHP version is doing 700 requests per second.

If there's nothing wrong with PHP, perhaps we misconfigured Laravel?

Revisiting Laravel

After scouring the web for configuration issues and performance tips, I found that two of the most popular techniques were to cache the config and route data to avoid processing them for every request. Therefore, we will take that advice and try these tips out.

╰─➤ php artisan config:cache

INFO Configuration cached successfully.

╰─➤ php artisan route:cache

INFO Routes cached successfully.

Everything looks good on the command line. Let's redo the benchmark.

╰─➤ wrk -d 10s -t 4 -c 100 http://127.0.0.1

Running 10s test @ http://127.0.0.1

4 threads and 100 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 1.13s 543.50ms 1.98s 61.90%

Req/Sec 25.45 13.39 50.00 55.77%

289 requests in 10.04s, 242.15KB read

Socket errors: connect 0, read 0, write 0, timeout 247

Requests/sec: 28.80

Transfer/sec: 24.13KB

Well, we have now increased performance from 21.04 to 28.80 requests per second, a dramatic uplift of almost 37%! This would be quite impressive for any software package... except for the fact that we're still only doing 1/24th of the number of requests of the pure PHP version.
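For anyone who wants to check the arithmetic, here it is as a few lines of Python. The three numbers are the Requests/sec figures reported by wrk above; the `percent_change` helper is just a name chosen for this sketch.

```python
def percent_change(old, new):
    """Relative change from old to new, expressed as a percentage."""
    return (new - old) / old * 100.0


laravel_before = 21.04   # Requests/sec before caching config and routes
laravel_after = 28.80    # Requests/sec after caching config and routes
pure_php = 704.46        # Requests/sec for the pure PHP script

uplift = percent_change(laravel_before, laravel_after)
ratio = pure_php / laravel_after

print(f"Uplift from caching: {uplift:.1f}%")
print(f"Pure PHP handles {ratio:.1f}x the requests of cached Laravel")
```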

If you're thinking that something must be wrong with this test, you should talk with the author of the Lucinda PHP framework. In his test results, he has Lucinda beating Laravel by 36x for HTML requests and 90x for JSON requests.

After testing on my own machine with both Apache and Nginx, I have no reason to doubt him. Laravel is really just that slow! PHP by itself is not that bad, but once you add in all of the extra processing that Laravel adds to each request, then I find it very difficult to recommend Laravel as a choice in 2023.

Django

PHP/WordPress accounts for about 40% of all websites on the web, making it by far the most dominant framework. Personally though, I find that popularity does not necessarily translate into quality any more than I find myself having a sudden uncontrollable urge for that extraordinary gourmet food from the most popular restaurant in the world... McDonald's. Since we've already tested pure PHP code, we're not going to test WordPress itself, as anything involving WordPress would undoubtedly be lower than the 700 requests per second that we observed with pure PHP.

Django is another popular framework that has been around for a long time. If you've used it in the past, you're probably fondly remembering its spectacular database administration interface along with how annoying it was to configure everything just the way that you wanted. Let's see how well Django works in 2023, especially with the ASGI interface that it added in version 3.0.

Setting up Django is remarkably similar to setting up Laravel, as they were both from the age where MVC architectures were stylish and correct. We'll skip the boring configuration and go straight to setting up the view.

from django.shortcuts import render

from django.http import HttpResponse

# =====================================================================

def index(request):

    count = request.session.get('count', 0)

    count += 1

    request.session['count'] = count

    return HttpResponse(f"Count is {count}")

That's four lines of code, the same as the Laravel version. Let's see how it performs.

╰─➤ python --version

Python 3.10.9

Python/Django

╰─➤ gunicorn --access-logfile - -k uvicorn.workers.UvicornWorker -w 4 djangotest.asgi

[2023-03-21 15:20:38 +0800] [2886633] [INFO] Starting gunicorn 20.1.0

╰─➤ wrk -d 10s -t 4 -c 100 http://127.0.0.1:8000/sessiontest/

Running 10s test @ http://127.0.0.1:8000/sessiontest/

4 threads and 100 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 277.71ms 142.84ms 835.12ms 69.93%

Req/Sec 91.21 57.57 230.00 61.04%

3577 requests in 10.06s, 1.46MB read

Requests/sec: 355.44

Transfer/sec: 148.56KB

Not bad at all at 355 requests per second. It's only half the performance of the pure PHP version, but it's also 12x that of the Laravel version. Django vs. Laravel seems to be no contest at all.

Flask

Apart from the larger everything-including-the-kitchen-sink frameworks, there are also smaller frameworks that just do some basic setup while letting you handle the rest. One of the best ones to use is Flask and its ASGI counterpart Quart. My own PaferaPy Framework is built on top of Flask, so I'm well acquainted with how easy it is to get things done while maintaining performance.

#!/usr/bin/python3

# -*- coding: utf-8 -*-

#

# Session benchmark test

import json

import psycopg

import uuid

from flask import Flask, session, redirect, url_for, request, current_app, g, abort, send_from_directory

from flask.sessions import SecureCookieSessionInterface

app = Flask('pafera')

app.secret_key = b'secretkey'

dbconn = 0

# =====================================================================

@app.route('/', defaults={'path': ''}, methods = ['GET', 'POST'])

@app.route('/<path:path>', methods = ['GET', 'POST'])

def index(path):

    """Handles all requests for the server.

    We route all requests through here to handle the database and session

    logic in one place.

    """

    global dbconn

    if not dbconn:

        dbconn = psycopg.connect('dbname=sessiontest user=sessiontest password=sessiontest')

        cursor = dbconn.execute('''

            CREATE TABLE IF NOT EXISTS usersessions(

                uid TEXT PRIMARY KEY,

                data TEXT

            )

        ''')

        cursor.close()

        dbconn.commit()

    sessionid = session.get('sessionid', 0)

    if not sessionid:

        sessionid = uuid.uuid4().hex

        session['sessionid'] = sessionid

    cursor = dbconn.execute("SELECT data FROM usersessions WHERE uid = %s", [sessionid])

    row = cursor.fetchone()

    count = json.loads(row[0])['count'] if row else 0

    count += 1

    newdata = json.dumps({'count': count})

    if count == 1:

        cursor.execute("""

            INSERT INTO usersessions(uid, data)

            VALUES(%s, %s)

            """,

            [sessionid, newdata]

        )

    else:

        cursor.execute("""

            UPDATE usersessions

            SET data = %s

            WHERE uid = %s

            """,

            [newdata, sessionid]

        )

    cursor.close()

    dbconn.commit()

    return f'Count is {count}'

As you can see, the Flask script is shorter than the pure PHP script. I find that out of all of the languages that I've used, Python is probably the most expressive language in terms of keystrokes typed. A lack of braces and semicolons, list and dict comprehensions, and blocks marked by indentation make Python rather simple yet powerful in its capabilities.

Unfortunately, Python is also one of the slowest general purpose languages in mainstream use, despite how much software has been written in it. The number of Python libraries available is about four times that of similar languages and covers a vast number of domains, yet no one would say that Python is speedy or performant outside of niches like NumPy.

Let's see how our Flask version compares to our previous frameworks.

Python/Flask

╰─➤ gunicorn --access-logfile - -w 4 flasksite:app

[2023-03-21 15:32:49 +0800] [2856296] [INFO] Starting gunicorn 20.1.0

╰─➤ wrk -d 10s -t 4 -c 100 http://127.0.0.1:8000

Running 10s test @ http://127.0.0.1:8000

4 threads and 100 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 91.84ms 11.97ms 149.63ms 86.18%

Req/Sec 272.04 39.05 380.00 74.50%

10842 requests in 10.04s, 3.27MB read

Requests/sec: 1080.28

Transfer/sec: 333.37KB

Our Flask script is actually faster than our pure PHP version!

If you're surprised by this, you should realize that our Flask app does all of its initialization and configuration when we start up the gunicorn server, while PHP re-executes the script every time a new request comes in. It's equivalent to Flask being the young, eager taxi driver who's already started the car and is waiting beside the road, while PHP is the old driver who stays at his house waiting for a call to come in and only then drives over to pick you up. Being an old school guy and coming from the days when PHP was a wonderful change from plain HTML and SHTML files, it's a bit sad to realize how much time has passed by, but the design differences really make it hard for PHP to compete against Python, Java, and Node.js servers that just stay in memory and handle requests with the nimble ease of a juggler.
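The taxi driver analogy can be turned into a toy model. The sketch below does not measure real time; it only counts how often a hypothetical setup step runs under each design. The `App` class and both function names are invented for this illustration.

```python
class App:
    """Toy application that counts how often its expensive setup runs."""

    setups = 0

    def __init__(self):
        # Stand-in for loading configuration, routes, and framework code.
        App.setups += 1

    def handle(self, n):
        return f"Count is {n}"


def per_request_model(requests):
    """PHP-style: rebuild the application state for every request."""
    return [App().handle(n) for n in range(1, requests + 1)]


def resident_model(requests):
    """gunicorn-style: build the application once, then keep reusing it."""
    app = App()
    return [app.handle(n) for n in range(1, requests + 1)]


App.setups = 0
per_request_model(1000)
per_request_setups = App.setups

App.setups = 0
resident_model(1000)
resident_setups = App.setups

print(f"Per-request model: {per_request_setups} setups")
print(f"Resident model:    {resident_setups} setup")
```

The more work a framework does during setup, the more this difference hurts the per-request design, which fits the gap we saw between pure PHP and Laravel.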

Starlette

Flask might be our fastest framework so far, but it's actually pretty old software. The Python community switched to the newer asynchronous ASGI servers a couple of years back, and of course, I myself have switched along with them.

The newest version of the Pafera Framework, PaferaPyAsync, is based upon Starlette. Although there is an ASGI version of Flask called Quart, the performance differences between Quart and Starlette were enough for me to rebase my code upon Starlette instead.

Asynchronous programming can be frightening to a lot of people, but it's actually not a difficult concept thanks to the Node.js guys popularizing the concept over a decade ago.

We used to fight concurrency with multithreading, multiprocessing, distributed computing, promise chaining, and all of those fun times that prematurely aged and desiccated many veteran programmers. Now, we just type async in front of our functions and await in front of any code that might take a while to execute. It is indeed more verbose than regular code, but much less annoying to use than having to deal with synchronization primitives, message passing, and resolving promises.
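Here is a minimal sketch of what that buys us, using only Python's standard asyncio module. The `fake_query` coroutine is a made-up stand-in for a database call, with a sleep in place of real I/O, and the delay values are arbitrary.

```python
import asyncio
import time


async def fake_query(delay):
    """Stand-in for a database call; awaiting the sleep lets other tasks run."""
    await asyncio.sleep(delay)
    return delay


async def serve_all(delays):
    """Handle every simulated request concurrently on a single thread."""
    return await asyncio.gather(*(fake_query(d) for d in delays))


delays = [0.05] * 20

start = time.perf_counter()
results = asyncio.run(serve_all(delays))
elapsed = time.perf_counter() - start

print(f"{len(results)} requests finished in {elapsed:.2f}s")
print(f"One after another, they would need about {sum(delays):.2f}s")
```

While one request is waiting on its "database", the event loop simply moves on to the next one, with no threads, locks, or message queues in sight.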

Our Starlette file looks like this:

#!/usr/bin/python3

# -*- coding: utf-8 -*-

#

# Session benchmark test

import json

import uuid

import psycopg

from starlette.applications import Starlette

from starlette.responses import Response, PlainTextResponse, JSONResponse, RedirectResponse, HTMLResponse

from starlette.routing import Route, Mount, WebSocketRoute

from starlette_session import SessionMiddleware

dbconn = 0

# =====================================================================

async def index(R):

    global dbconn

    if not dbconn:

        dbconn = await psycopg.AsyncConnection.connect('dbname=sessiontest user=sessiontest password=sessiontest')

        cursor = await dbconn.execute('''

            CREATE TABLE IF NOT EXISTS usersessions(

                uid TEXT PRIMARY KEY,

                data TEXT

            )

        ''')

        await cursor.close()

        await dbconn.commit()

    sessionid = R.session.get('sessionid', 0)

    if not sessionid:

        sessionid = uuid.uuid4().hex

        R.session['sessionid'] = sessionid

    cursor = await dbconn.execute("SELECT data FROM usersessions WHERE uid = %s", [sessionid])

    row = await cursor.fetchone()

    count = json.loads(row[0])['count'] if row else 0

    count += 1

    newdata = json.dumps({'count': count})

    if count == 1:

        await cursor.execute("""

            INSERT INTO usersessions(uid, data)

            VALUES(%s, %s)

            """,

            [sessionid, newdata]

        )

    else:

        await cursor.execute("""

            UPDATE usersessions

            SET data = %s

            WHERE uid = %s

            """,

            [newdata, sessionid]

        )

    await cursor.close()

    await dbconn.commit()

    return PlainTextResponse(f'Count is {count}')

# *********************************************************************

app = Starlette(

debug = True,

routes = [

Route('/{path:path}', index, methods = ['GET', 'POST']),

],

)

app.add_middleware(

SessionMiddleware,

secret_key = 'testsecretkey',

cookie_name = "pafera",

)

As you can see, it's pretty much copied and pasted from our Flask script with only a couple of routing changes and the async/await keywords.

How much improvement can copied and pasted code really give us?

Python/Starlette

╰─➤ gunicorn --access-logfile - -k uvicorn.workers.UvicornWorker -w 4 starlettesite:app

[2023-03-21 15:42:34 +0800] [2856220] [INFO] Starting gunicorn 20.1.0

╰─➤ wrk -d 10s -t 4 -c 100 http://127.0.0.1:8000

Running 10s test @ http://127.0.0.1:8000

4 threads and 100 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 21.85ms 10.45ms 67.29ms 55.18%

Req/Sec 1.15k 170.11 1.52k 66.00%

45809 requests in 10.04s, 13.85MB read

Requests/sec: 4562.82

Transfer/sec: 1.38MB

We have a new champion, ladies and gentlemen! Our previous high was our pure PHP version at 704 requests per second, which was then overtaken by our Flask version at 1080 requests per second. Our Starlette script crushes all previous contenders at 4562 requests per second, meaning a 6x improvement over pure PHP and 4x improvement over Flask.

If you haven't changed your WSGI Python code over to ASGI yet, now might be a good time to start.
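If ASGI still sounds mysterious, it helps to see how small the interface is underneath the frameworks. The sketch below is a bare ASGI application with no framework at all, driven by hand instead of by a real server such as uvicorn. The `call_once` helper and the response text are made up for this example, and a production app would also need to handle request bodies and the lifespan protocol.

```python
import asyncio


async def app(scope, receive, send):
    """A bare ASGI application: one coroutine call per connection."""
    if scope["type"] != "http":
        return  # this sketch only answers plain HTTP requests

    body = f"You asked for {scope['path']}".encode("utf-8")

    # An HTTP response is sent as two messages: the start, then the body.
    await send({
        "type": "http.response.start",
        "status": 200,
        "headers": [(b"content-type", b"text/plain; charset=utf-8")],
    })
    await send({"type": "http.response.body", "body": body})


async def call_once(path):
    """Drive the app by hand, roughly the way an ASGI server would."""
    sent = []

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(message):
        sent.append(message)

    scope = {"type": "http", "method": "GET", "path": path, "headers": []}
    await app(scope, receive, send)
    return sent


messages = asyncio.run(call_once("/sessiontest/"))
print(messages[0]["status"], messages[1]["body"].decode("utf-8"))
```

Starlette, Quart, and Django's ASGI mode all boil down to a callable with this shape, which is why the same gunicorn and uvicorn command line works for each of them.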

Node.js/ExpressJS

So far, we've only covered PHP and Python frameworks. However, a large portion of the world actually uses Java, DotNet, Node.js, Ruby on Rails, and other such technologies for their websites. This is by no means a comprehensive overview of all of the world's ecosystems and biomes, so to avoid doing the programming equivalent of organic chemistry, we'll choose only the frameworks that are easiest to type code for... of which Java is definitely not.

Unless you've been hiding underneath your copy of K&R C or Knuth's The Art of Computer Programming for the last fifteen years, you've probably heard of Node.js. Those of us who have been around since the beginning of JavaScript are either incredibly frightened, amazed, or both at the state of modern JavaScript, but there's no denying that JavaScript has become a force to be reckoned with on servers as well as browsers. After all, we even have BigInt for arbitrary-precision integers in the language now! That's far better than every number being stored as a 64 bit float!

ExpressJS is probably the easiest Node.js server to use, so we'll do a quick and dirty Node.js/ExpressJS app to serve our counter.

/**********************************************************************

* Simple session test using ExpressJS.

**********************************************************************/

var L = console.log;

var uuid = require('uuid4');

var express = require('express');

var session = require('express-session');

var MemoryStore = require('memorystore')(session);

var { Client } = require('pg')

var db = 0;

var app = express();

const PORT = 8000;

//session middleware

app.use(

session({

secret: "secretkey",

saveUninitialized: true,

resave: false,

store: new MemoryStore({

checkPeriod: 1000 * 60 * 60 * 24 // prune expired entries every 24h

})

})

);

app.get('/',

async function(req,res)

{

if (!db)

{

db = new Client({

user: 'sessiontest',

host: '127.0.0.1',

database: 'sessiontest',

password: 'sessiontest'

});

await db.connect();

await db.query(`

CREATE TABLE IF NOT EXISTS usersessions(

uid TEXT PRIMARY KEY,

data TEXT

)`,

[]

);

};

var session = req.session;

if (!session.sessionid)

{

session.sessionid = uuid();

}

var row = 0;

let queryresult = await db.query(`

SELECT data::TEXT

FROM usersessions

WHERE uid = $1`,

[session.sessionid]

);

if (queryresult && queryresult.rows.length)

{

row = queryresult.rows[0].data;

}

var count = 0;

if (row)

{

var data = JSON.parse(row);

data.count += 1;

count = data.count;

await db.query(`

UPDATE usersessions

SET data = $1

WHERE uid = $2

`,

[JSON.stringify(data), session.sessionid]

);

} else

{

await db.query(`

INSERT INTO usersessions(uid, data)

VALUES($1, $2)`,

[session.sessionid, JSON.stringify({count: 1})]

);

count = 1;

}

res.send(`Count is ${count}`);

}

);

app.listen(PORT, () => console.log(`Server Running at port ${PORT}`));

This code was actually easier to write than the Python versions, although native JavaScript gets rather unwieldy when applications become larger, and all attempts to correct this such as TypeScript quickly become more verbose than Python.

Let's see how this performs!

Node.js/ExpressJS

╰─➤ node --version

v19.6.0

╰─➤ NODE_ENV=production node nodejsapp.js

Server Running at port 8000

╰─➤ wrk -d 10s -t 4 -c 100 http://127.0.0.1:8000

Running 10s test @ http://127.0.0.1:8000

4 threads and 100 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 90.41ms 7.20ms 188.29ms 85.16%

Req/Sec 277.15 37.21 393.00 81.66%

11018 requests in 10.02s, 3.82MB read

Requests/sec: 1100.12

Transfer/sec: 390.68KB

You may have heard ancient (ancient by Internet standards anyways...) folktales about Node.js' speed, and those stories are mostly true thanks to the spectacular work that Google has done with the V8 JavaScript engine. In this case though, although our quick app outperforms the Flask script, its single threaded nature is defeated by the four async processes wielded by the Starlette Knight who says "Ni!".

Let's get some more help!

╰─➤ pm2 start nodejsapp.js -i 4

[PM2] Spawning PM2 daemon with pm2_home=/home/jim/.pm2

[PM2] PM2 Successfully daemonized

[PM2] Starting /home/jim/projects/paferarust/nodejsapp.js in cluster_mode (4 instances)

[PM2] Done.

┌────┬──────────────┬─────────────┬─────────┬─────────┬──────────┬────────┬──────┬───────────┬──────────┬──────────┬──────────┬──────────┐

│ id │ name │ namespace │ version │ mode │ pid │ uptime │ ↺ │ status │ cpu │ mem │ user │ watching │

├────┼──────────────┼─────────────┼─────────┼─────────┼──────────┼────────┼──────┼───────────┼──────────┼──────────┼──────────┼──────────┤

│ 0 │ nodejsapp │ default │ N/A │ cluster │ 37141 │ 0s │ 0 │ online │ 0% │ 64.6mb │ jim │ disabled │

│ 1 │ nodejsapp │ default │ N/A │ cluster │ 37148 │ 0s │ 0 │ online │ 0% │ 64.5mb │ jim │ disabled │

│ 2 │ nodejsapp │ default │ N/A │ cluster │ 37159 │ 0s │ 0 │ online │ 0% │ 56.0mb │ jim │ disabled │

│ 3 │ nodejsapp │ default │ N/A │ cluster │ 37171 │ 0s │ 0 │ online │ 0% │ 45.3mb │ jim │ disabled │

└────┴──────────────┴─────────────┴─────────┴─────────┴──────────┴────────┴──────┴───────────┴──────────┴──────────┴──────────┴──────────┘

Okay! Now it's an even four on four battle! Let's benchmark!

╰─➤ wrk -d 10s -t 4 -c 100 http://127.0.0.1:8000

Running 10s test @ http://127.0.0.1:8000

4 threads and 100 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 45.09ms 19.89ms 176.14ms 60.22%

Req/Sec 558.93 97.50 770.00 66.17%

22234 requests in 10.02s, 7.71MB read

Requests/sec: 2218.69

Transfer/sec: 787.89KB

Still not quite at the level of Starlette, but it's not bad for a quick five minute JavaScript hack. From my own testing, this script is actually being held back a bit at the database interfacing level because node-postgres is nowhere near as efficient as psycopg is for Python. Switching to SQLite as the database yields over 3000 requests per second for the same ExpressJS code.

The main thing to note is that despite the slow execution speed of Python, ASGI frameworks can actually be competitive with Node.js solutions for certain workloads.

Rust/Actix

So now, we're getting closer to the top of the mountain, and by mountain, I mean the highest benchmark scores recorded by mice and men alike.

If you look at most of the framework benchmarks available on the web, you'll notice that there are two languages that tend to dominate the top: C++ and Rust. I've worked with C++ since the 90s, and I even had my own Win32 C++ framework back before MFC/ATL was a thing, so I have a lot of experience with the language. It's not much fun to work with something when you already know it, so we're going to do a Rust version instead. ;)

Rust is relatively new as far as programming languages go, but it became an object of curiosity for me when Linus Torvalds announced that he would accept Rust as a Linux kernel programming language. For us older programmers, that's about the same as saying that this newfangled new age hippie thingie was going to be a new amendment to the U.S. Constitution.

Now, when you're an experienced programmer, you tend not to jump on the bandwagon as fast as the younger folks do, or else you might get burned by rapid changes to the language or libraries. (Anyone who used the first version of AngularJS will know what I'm talking about.) Rust is still somewhat in that experimental development stage, and I find it funny that so many code examples on the web don't even compile anymore with current versions of packages.

However, the performance shown by Rust applications cannot be denied. If you've never tried ripgrep or fd-find out on large source code trees, you should definitely give them a spin. They're even available for most Linux distributions simply from the package manager. You're exchanging verbosity for performance with Rust... a lot of verbosity for a lot of performance.

The complete code for Rust is a bit large, so we'll just take a look at the relevant handlers here:

// =====================================================================

pub async fn RunQuery(

db: &web::Data<Pool>,

query: &str,

args: &[&(dyn ToSql + Sync)]

) -> Result<Vec<tokio_postgres::row::Row>, tokio_postgres::Error>

{

let client = db.get().await.unwrap();

let statement = client.prepare_cached(query).await.unwrap();

client.query(&statement, args).await

}

// =====================================================================

pub async fn index(

req: HttpRequest,

session: Session,

db: web::Data<Pool>,

) -> Result<HttpResponse, Error>

{

let mut count = 1;

if let Some(sessionid) = session.get::<String>("sessionid")?

{

let rows = RunQuery(

&db,

"SELECT data

FROM usersessions

WHERE uid = $1",

&[&sessionid]

).await.unwrap();

if rows.is_empty()

{

let jsondata = serde_json::json!({

"count": 1,

}).to_string();

RunQuery(

&db,

"INSERT INTO usersessions(uid, data)

VALUES($1, $2)",

&[&sessionid, &jsondata]

).await

.expect("Insert failed!");

} else

{

let jsonstring:&str = rows[0].get(0);

let countdata: CountData = serde_json::from_str(jsonstring)?;

count = countdata.count;

count += 1;

let jsondata = serde_json::json!({

"count": count,

}).to_string();

RunQuery(

&db,

"UPDATE usersessions

SET data = $1

WHERE uid = $2

",

&[&jsondata, &sessionid]

).await

.expect("Update failed!");

}

} else

{

let sessionid = Uuid::new_v4().to_string();

let jsondata = serde_json::json!({

"count": 1,

}).to_string();

RunQuery(

&db,

"INSERT INTO usersessions(uid, data)

VALUES($1, $2)",

&[&sessionid, &jsondata]

).await

.expect("Insert failed!");

session.insert("sessionid", sessionid)?;

}

Ok(HttpResponse::Ok().body(format!(

"Count is {:?}",

count

)))

}

This is much more complicated than the Python/Node.js versions...

Rust/Actix

╰─➤ cargo run --release

[2023-03-21T23:37:25Z INFO actix_server::builder] starting 4 workers

Server running at http://127.0.0.1:8888/

╰─➤ wrk -d 10s -t 4 -c 100 http://127.0.0.1:8888

Running 10s test @ http://127.0.0.1:8888

4 threads and 100 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 9.93ms 3.90ms 77.18ms 94.87%

Req/Sec 2.59k 226.41 2.83k 89.25%

102951 requests in 10.03s, 24.59MB read

Requests/sec: 10267.39

Transfer/sec: 2.45MB

And much more performant!

Our Rust server using Actix/deadpool_postgres handily beats our previous champion Starlette by +125%, ExpressJS by +363%, and pure PHP by +1357%. (I'll leave the performance delta with the Laravel version as an exercise for the reader.)
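If you would rather not do the percentages by hand, the Requests/sec lines that wrk printed throughout this article are easy to parse. The sketch below does that in Python; the dictionary simply repeats those lines, while the labels and helper names are made up for this example. Small differences from hand-rounded figures are to be expected.

```python
import re

# The "Requests/sec" lines from the wrk runs in this article.
WRK_RESULTS = {
    "Laravel": "Requests/sec: 21.04",
    "Laravel (cached)": "Requests/sec: 28.80",
    "Django": "Requests/sec: 355.44",
    "Pure PHP": "Requests/sec: 704.46",
    "Flask": "Requests/sec: 1080.28",
    "ExpressJS (1 process)": "Requests/sec: 1100.12",
    "ExpressJS (4 processes)": "Requests/sec: 2218.69",
    "Starlette": "Requests/sec: 4562.82",
    "Actix": "Requests/sec: 10267.39",
}


def requests_per_second(wrk_output):
    """Pull the Requests/sec figure out of a chunk of wrk output."""
    match = re.search(r"Requests/sec:\s*([\d.]+)", wrk_output)
    if match is None:
        raise ValueError("no Requests/sec line found")
    return float(match.group(1))


def percent_faster(baseline, contender):
    """How much faster the contender is than the baseline, in percent."""
    return (contender - baseline) / baseline * 100.0


rates = {name: requests_per_second(text) for name, text in WRK_RESULTS.items()}
fastest = max(rates, key=rates.get)

# Slowest first, with the gap to the fastest entry on each line.
for name, rate in sorted(rates.items(), key=lambda item: item[1]):
    delta = percent_faster(rate, rates[fastest])
    print(f"{name:<24}{rate:>10.2f} req/s   {fastest} is +{delta:.0f}%")
```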

I've found that learning the Rust language itself has been more difficult than other languages since it has many more gotchas than anything I've seen outside of 6502 Assembly, but if your Rust server can take on 14x the number of users as your PHP server, then perhaps there's something to be gained from switching technologies after all. That is why the next version of the Pafera Framework will be based upon Rust. The learning curve is much steeper than that of scripting languages, but the performance will be worth it. If you can't put the time in to learn Rust, then basing your tech stack on Starlette or Node.js is not a bad decision either.

Technical Debt

In the last twenty years, we've gone from cheap static hosting sites to shared hosting with LAMP stacks to renting VPSes to AWS, Azure, and other cloud services. Nowadays, many companies are satisfied with making design decisions based upon whomever they can find that's available or cheapest, since the advent of convenient cloud services has made it easy to throw more hardware at slow servers and applications. This has given them great short term gains at the cost of long term technical debt.

Over 60 years ago, there was a great space race between the Soviet Union and the United States. The Soviets won most of the early milestones. They had the first satellite in Sputnik, the first dog in orbit in Laika, the first spacecraft to reach the moon in Luna 2, the first man and woman in space in Yuri Gagarin and Valentina Tereshkova, and so forth...

But they were slowly accumulating technical debt.

Although the Soviets were first to each of these achievements, their engineering processes and goals were causing them to focus on short term challenges rather than long term feasibility. They won each time they leaped, but they were getting more tired and slower while their opponents continued to take consistent strides toward the finish line.

Once Neil Armstrong took his historic steps on the moon on live television, the Americans took the lead, and then stayed there as the Soviet program faltered. This is no different than companies today who have focused on the next big thing, the next big payoff, or the next big tech while failing to develop proper habits and strategies for the long haul.

Being first to market does not mean that you will become the dominant player in that market. Alternatively, taking the time to do things right does not guarantee success, but certainly increases your chances of long term achievements. If you're the tech lead for your company, choose the right direction and tools for your workload. Don't let popularity replace performance and efficiency.

Resources

Want to download a 7z file containing the Rust, ExpressJS, Flask, Starlette, and Pure PHP scripts?

About the Author

Jim has been programming since he got an IBM PS/2 back during the 90s. To this day, he still prefers writing HTML and SQL by hand, and focuses on efficiency and correctness in his work.